Data security is one of the biggest issues with modern AI, and the fears and restrictions around it are hindering adoption. These fears are not completely unfounded: AI systems are still new and developing, and they are constantly hungry for data to ingest and learn from. That data is stored, and it may be used for others to learn from, or worse, it may appear in places it should not. There are several stories online of public chatbots disclosing confidential information.

But there are things you can do to protect your information. The first is to check the policies of the system you intend to use.

Tuning Your System

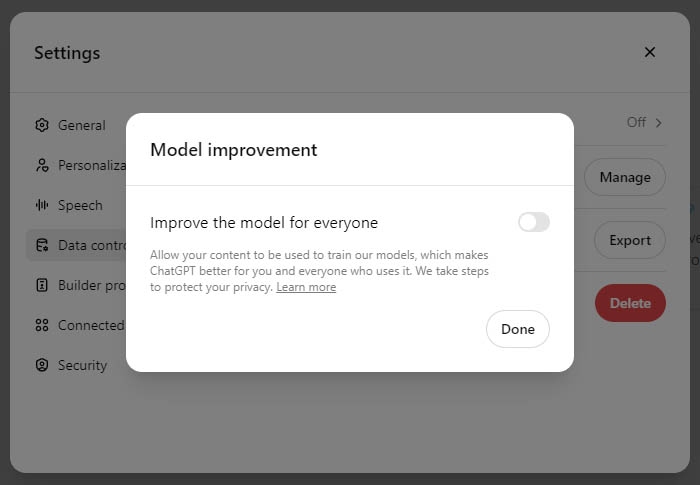

Some systems offer an opt-out option, which lets you set the system up so that it does not share or reuse your data and prompts.

ChatGPT, Claude, Perplexity, Gemini and the Adobe applications all have settings you’ll need to adjust.

In ChatGPT, this can be found under Settings -> Data Controls -> Improve the model for everyone; set it to Off.

Copilot is a bit trickier: the version for private users does not have an opt-out feature, whereas the version for business (and school) users states that data falls under Commercial Data Protection and is not shared.

Grammarly did not provide an opt-out option at the time of writing, and Meta states that while it protects your data, information may be shared with partner companies.

All of these systems do issue warnings about confidential information:

“Please don’t enter confidential information in your conversations or any data you wouldn’t want a reviewer to see or Google to use to improve our products, services, and machine-learning technologies”. – Google Gemini Privacy Hub

Data Anonymisation and De-Identification

If you want to upload confidential company or private data, you can take additional steps to protect sensitive information. Look through the data and check whether it contains anything confidential, particularly Personally Identifiable Information (PII). If you upload your sales data, for example, you may want to check whether it contains company names.

If you have any sensitive information, you can use one of the following methods:

- Suppression: remove the respective column(s) – if the AI can do its job without them, it’s best to remove them.

- Masking and Pseudonymisation: replace the data with coded placeholders that you can re-substitute later.

- Generalisation: reduce the data precision from exact to broader information, for example by replacing an exact figure with a range.
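To make the three methods more concrete, here is a minimal Python sketch that applies them to a small, made-up sales table before it would be uploaded. The column names (`company`, `contact_email`, `amount`), the sample values, and the helper functions are all assumptions for illustration only; your own data and tooling will look different.

```python
from typing import Dict, List, Tuple

Record = Dict[str, object]


def suppress(rows: List[Record], columns: List[str]) -> List[Record]:
    """Suppression: drop the given columns from every row."""
    return [{k: v for k, v in row.items() if k not in columns} for row in rows]


def pseudonymise(
    rows: List[Record], column: str, prefix: str = "ID"
) -> Tuple[List[Record], Dict[str, object]]:
    """Pseudonymisation: swap each distinct value for a coded placeholder.

    Returns the new rows plus a lookup table (placeholder -> original)
    that stays with you so the values can be re-substituted later.
    """
    mapping: Dict[object, str] = {}
    out = []
    for row in rows:
        value = row[column]
        if value not in mapping:
            mapping[value] = f"{prefix}_{len(mapping) + 1:03d}"
        out.append({**row, column: mapping[value]})
    lookup = {placeholder: original for original, placeholder in mapping.items()}
    return out, lookup


def generalise_amount(rows: List[Record], column: str, step: int = 1000) -> List[Record]:
    """Generalisation: replace an exact number with the range it falls into."""
    out = []
    for row in rows:
        low = (int(row[column]) // step) * step
        out.append({**row, column: f"{low}-{low + step - 1}"})
    return out


# Hypothetical sales data, invented for this example.
sales = [
    {"company": "Acme Ltd", "contact_email": "jo@acme.example", "amount": 1250},
    {"company": "Globex", "contact_email": "sam@globex.example", "amount": 980},
    {"company": "Acme Ltd", "contact_email": "jo@acme.example", "amount": 4300},
]

safe = suppress(sales, ["contact_email"])                   # the AI does not need emails
safe, lookup = pseudonymise(safe, "company", "COMPANY")     # keep `lookup` private
safe = generalise_amount(safe, "amount", step=1000)         # exact figures become ranges

for row in safe:
    print(row)
```

Only `safe` would be shared with the AI system. The `lookup` table never leaves your machine, which is what allows you to map the placeholders in the AI's answer back to the real company names afterwards.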

Data security is a sensitive topic. There are some potential dangers, but there are measures you can take to use AI safely.